Gigamon and its partners Splunk and Phantom demonstrated improvements to network security at NFD16. APIs and integration between products from different vendors will play an increasing role in network security.

Network Packet Flow Analysis Without Negative Impact

Gigamon is known for its packet broker product: a network tap that monitors network traffic and forwards it to network management and network security tools. Gigamon, Splunk, and Phantom used this session to tell us about an integration between their products to increase network security.

Ananda Rajagopal of Gigamon kicked off the session by reviewing the company’s model for handling network security. (See the recordings at Gigamon Presents at Network Field Day 16.)

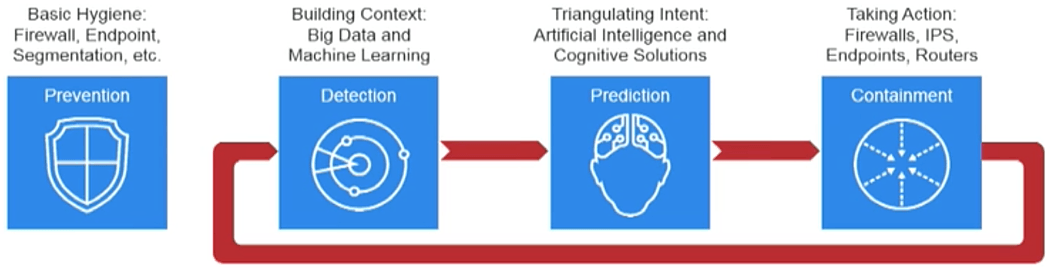

Gigamon’s starting premise is that preventing all security intrusions is impossible. I think that’s a realistic premise. To prevent all attacks, you have to cover 100 percent of your IT system’s vulnerabilities. That’s simply not possible. The approach that several vendors have taken is multipronged. Gigamon calls its system The Defender Lifecycle Model. It consists of prevention, detection, prediction, and containment (see graphic above).

Prevention is the standard function of applying basic security best practices to the network. Detection should be obvious: identifying threats as they occur. The word prediction implies that it predicts a vulnerability; I think of it as simply the step between detecting a security event and containing that event. You can also think of it as the step that predicts which systems, protocols, network devices, and links will be affected. Finally, containment is the step of responding to the threat and taking actions to restrict or eliminate it.

Ideally, the sequence identified by the red arrows would run in near real-time such that, as a threat is detected, the affected systems are identified and containment actions are taken. Of course, automation is required to make it run in near real-time — particularly in the Gigamon model where some of the functionality is performed by products from other vendors.

Gigamon’s role in this model should now be clear. Threats are detected by performing big data analysis of packet flows, and the source of that data is the Gigamon tap infrastructure. Instead of forwarding full packets, it can forward a summary of the packets as flow records in either NetFlow or IPFIX format. In the presentation, you’ll hear the company refer to this as packet metadata, but it is really just a full flow data feed. Full flow data feeds are needed to perform complete network security analysis, especially when you consider that some attacks may be contained in just a few packets.
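To make the idea of a full flow data feed concrete, here is a minimal sketch of how individual packets can be collapsed into per-flow records. It is not Gigamon’s implementation and it does not produce the NetFlow or IPFIX wire format; the `Packet` fields, the `to_flow_records` helper, and the sample addresses are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    """Hypothetical, simplified view of one captured packet."""
    ts: float      # capture timestamp in seconds
    src: str       # source IP address
    dst: str       # destination IP address
    sport: int     # source port
    dport: int     # destination port
    proto: str     # transport protocol name
    length: int    # packet size in bytes


def to_flow_records(packets):
    """Collapse packets into one summary record per 5-tuple."""
    flows = {}
    for p in packets:
        key = (p.src, p.dst, p.sport, p.dport, p.proto)
        rec = flows.get(key)
        if rec is None:
            # First packet seen for this flow: start a new record.
            flows[key] = {"first": p.ts, "last": p.ts,
                          "packets": 1, "bytes": p.length}
        else:
            # Later packet: extend the time range and the counters.
            rec["first"] = min(rec["first"], p.ts)
            rec["last"] = max(rec["last"], p.ts)
            rec["packets"] += 1
            rec["bytes"] += p.length
    return flows


# Illustrative traffic: two packets of one TCP flow and one DNS query.
packets = [
    Packet(0.00, "10.0.0.5", "192.0.2.10", 51000, 443, "tcp", 60),
    Packet(0.02, "10.0.0.5", "192.0.2.10", 51000, 443, "tcp", 1500),
    Packet(0.05, "10.0.0.9", "198.51.100.7", 40000, 53, "udp", 80),
]
flows = to_flow_records(packets)
for key, rec in flows.items():
    print(key, rec)
```

Because every packet is counted, even a flow of one or two packets gets its own record, which is the property that matters for security analysis.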

Gigamon talked about using the packet metadata for security analysis. There was some discussion about the wording. The company used the term ‘analysis,’ but my take was that its functionality is not so much analysis as extracting metadata from the packets and rewriting the data in a form that other tools can ingest. The value of a packet broker like Gigamon is that, as a hardware NetFlow platform, it can build flow data from full packet capture rather than from the packet sampling that would result from exporting flows on most network platforms.
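A quick calculation shows why sampling is a problem for short attacks. The sketch below assumes that each packet is sampled independently with probability 1/N, which is a simplification (real platforms may sample deterministically), and the sampling rates are illustrative rather than taken from the presentation. Under that assumption, a flow of k packets is missed entirely with probability (1 - 1/N)^k.

```python
def miss_probability(flow_packets: int, sampling_rate: int) -> float:
    """Chance that no packet of a flow is sampled at 1-in-N random sampling.

    Assumes each packet is sampled independently with probability 1/N.
    """
    return (1 - 1 / sampling_rate) ** flow_packets


# A three-packet attack under unsampled capture and two sampling rates.
for rate in (1, 100, 1000):
    p = miss_probability(3, rate)
    print(f"1-in-{rate} sampling: {p:.4f} chance the flow is never seen")
```

At 1-in-1000 sampling, a three-packet flow goes unseen about 99.7 percent of the time, while a rate of 1 (every packet inspected) never misses it.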

Splunk

Splunk is expanding its scope from log analysis to a security analysis system. I don’t think this could be done through log analysis alone. It needs multiple sources of data to be a good SIEM, and data from Gigamon is just one of the sources.

An interesting use case from Splunk correlated an internal application failure with an unanswered customer call and a subsequent complaint on Twitter. Note: It wasn’t clear how much effort would have been required to create these associations for each individual call. It also was not clear how this example fit into the security theme, other than as a demonstration of the type of complex associations that can be detected quickly.

During the presentation, Wissam Ali-Ahmad, lead solutions architect at Splunk, positioned Splunk as the nerve center of a security alerting system. I can see that this might be a reasonable position, given that most organizations are deploying multiple security analysis tools, each with its own logging and alerting system. Centralizing the logs and alerts would certainly be an advantage.

Phantom

Phantom is a security operations center product that focuses on reducing the time it takes to determine that a security event has occurred and to take action. The actions that the security staff would take are embodied in a set of playbooks that the automation system executes when an event is detected. The company’s system seems to be rule-based (based on Phantom’s internal description by Robert Truesdell). I wonder if it is working on machine learning technology. If not, it should.

Phantom seems to best fit into the prediction and containment phases of the Gigamon Defender Lifecycle Model. An interesting example was to automate the process of investigating phishing email attacks.
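A rule-based playbook of the kind described above can be pictured as a lookup from event type to an ordered list of actions. The sketch below is only an illustration of that idea, using the phishing case as the example; it is not Phantom’s API, and every name in it (`PLAYBOOKS`, `run_playbook`, the action functions, and the event fields) is hypothetical.

```python
from typing import Callable, Dict, List

Event = Dict[str, str]
Action = Callable[[Event], str]


# Hypothetical actions; a real system would call out to mail gateways,
# firewalls, and ticketing tools instead of returning strings.
def quarantine_email(event: Event) -> str:
    return f"quarantined message {event['message_id']}"


def block_url(event: Event) -> str:
    return f"blocked {event['url']}"


def notify_analyst(event: Event) -> str:
    return f"notified analyst about {event['type']}"


# Each rule maps an event type to the ordered steps a human would take.
PLAYBOOKS: Dict[str, List[Action]] = {
    "phishing": [quarantine_email, block_url, notify_analyst],
}


def run_playbook(event: Event) -> List[str]:
    """Run every action for the event type; unknown types go to a human."""
    actions = PLAYBOOKS.get(event["type"], [notify_analyst])
    return [action(event) for action in actions]


results = run_playbook({
    "type": "phishing",
    "message_id": "msg-001",
    "url": "http://example.invalid/login",
})
for line in results:
    print(line)
```

The fixed mapping is what makes this approach rule-based: an event type with no matching playbook simply falls through to an analyst rather than being handled automatically.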

Summary

The Defender Lifecycle Model sounds like an alternative to the Cisco Tetration data collection mechanism, covered in Cisco’s NFD16 session. What’s the difference? Gigamon relies on partners like Splunk and Phantom to do the “big data” analysis and perform actions on platforms from multiple vendors. Is it a viable alternative to Tetration? I’ll leave it up to you to decide which platform best meets your organization’s needs.