Latency, congestion and sluggish TCP stacks can plague application performance. Understanding data center interconnect technologies is key to avoiding these problems.

What factors influence the performance of a high-speed, high-latency data center interconnect?

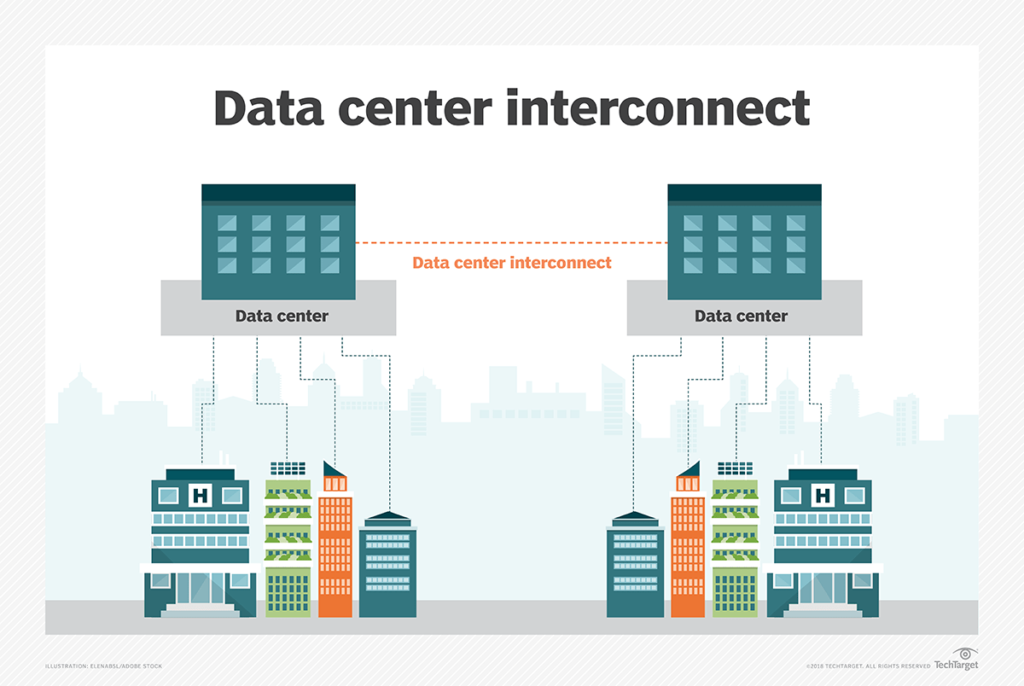

Redundant data centers are common, providing organizations with business continuity. But how far apart is too far for good, reliable application performance?

The consultancy I work for, NetCraftsmen, recently had a customer inquire about possible performance problems in replicating data between two data centers located about 2,000 miles apart. One-way latency was roughly 10 milliseconds per 1,000 miles, or about 20 ms each way, so these data centers were recording up to 40 ms of round-trip latency between them. Can the customer expect the data replication system to function well at these distances? Let’s look at some of the key data center interconnect technologies that influence application performance.

First, a little history: Top WAN speeds just a few years ago were 100 Mbps; it is now common to see 1 Gbps WAN links, with some carriers offering 10 Gbps paths. It seems intuitive that if you need better application performance, increasing the WAN speed by a factor of 10 (from 100 Mbps to 1 Gbps, or from 1 Gbps to 10 Gbps) would result in a tenfold increase in application performance. But that rarely occurs. Other factors limit the performance improvement.

Latency, congestion can plague DCI strategies

Bandwidth Delay Product and What it Signifies

One consideration among data center interconnect technologies is the amount of data that is buffered in a long-delay network pipe. I will skip the detailed explanation of how TCP works, as there are many good explanations available. In brief, TCP throughput is limited by the round-trip latency and the amount of data the receiving system can buffer.

One way to measure TCP performance is the bandwidth delay product (BDP), which gauges how much data TCP should have in flight at one time to fully utilize the available channel capacity. It is the link speed multiplied by the round-trip latency. In our example above, assuming a 1 Gbps link, the BDP is about 5 MB (0.04 seconds x 1 billion bps / 8 bits per byte) for full throughput.
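The arithmetic above can be checked with a few lines of Python. This is a minimal sketch: the function names are illustrative, and the 1 Gbps link speed and the 10 ms per 1,000 miles figure are taken from the example in this article rather than measured.

```python
def rtt_seconds_for_distance(miles: float, one_way_ms_per_1000_miles: float = 10.0) -> float:
    """Estimate round-trip time from distance, using the article's rule of thumb."""
    one_way_ms = miles / 1000 * one_way_ms_per_1000_miles
    return 2 * one_way_ms / 1000  # double for the round trip, convert ms to seconds


def bdp_bytes(link_bps: float, rtt_seconds: float) -> float:
    """Bandwidth delay product in bytes: link speed (bits/s) x RTT (s) / 8 bits per byte."""
    return link_bps * rtt_seconds / 8


rtt = rtt_seconds_for_distance(2000)      # about 0.04 s for the 2,000-mile path
bdp = bdp_bytes(1e9, rtt)                 # assumes a 1 Gbps link
print(f"RTT: {rtt * 1000:.0f} ms, BDP: {bdp / 1e6:.1f} MB")
```

For the 2,000-mile path this reports a 40 ms round trip and a BDP of about 5 MB, matching the figures above.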

Both the sending and receiving systems must buffer the 5 MB of data required to fill the pipe. At full speed, the systems must transfer 5 MB every 40 ms. Older operating systems that used fixed buffers had to be configured to buffer the desired amount of data for optimum performance over big BDP paths. These older operating systems often had default buffers of 64 KB, which limited throughput to one 64 KB window per 40 ms round trip, or about 1.6 MBps, over the 2,000-mile path. Fortunately, more modern operating systems automatically adjust their buffer size for good performance.
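The effect of a fixed buffer can be sketched as a simple model: throughput is the smaller of the link rate and one window per round trip. This is a simplification that ignores packet loss, protocol overhead and slow start, and the function name is illustrative.

```python
def max_throughput_bytes_per_sec(window_bytes: float, rtt_seconds: float, link_bps: float) -> float:
    """Upper bound on TCP throughput: one window per round trip, capped by the link rate."""
    window_limited = window_bytes / rtt_seconds
    link_limited = link_bps / 8
    return min(window_limited, link_limited)


RTT = 0.04  # 40 ms round trip from the 2,000-mile example

for link_bps in (1e9, 10e9):
    for window in (64 * 1024, 5_000_000):
        rate = max_throughput_bytes_per_sec(window, RTT, link_bps)
        print(f"link {link_bps / 1e9:.0f} Gbps, window {window} bytes: {rate / 1e6:.1f} MBps")
```

With a 64 KB window the result is about 1.6 MBps on both the 1 Gbps and the 10 Gbps link, which is why a tenfold faster WAN does not by itself produce a tenfold improvement.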

The wide variation in throughput over large BDP paths means you should understand the sending and receiving systems and their configuration if you need optimum performance.

One way to increase throughput is to reduce the round-trip latency. However, a number of research papers describe system architectures in which distributed data storage can be nearly as effective as local storage. This is quite different from the earlier thinking that data should be located near the systems that will use it.

Taking a Look at Some of the Technical Obstacles of Data Center Interconnect Technologies

Of course, other factors associated with data center interconnect technologies may come into play. Among them:

Latency. Low latency is important for applications that use small transactions. As the number of packet exchanges grows, round-trip time dominates the total time needed to complete an action. An application that relies on hundreds of small transactions to perform a single user action would exhibit good performance in a LAN environment, with latencies between 1 ms and 2 ms. However, performance would degrade if those actions were run over a 50 ms round-trip path, where a single user action might take five to 10 seconds.
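A rough model makes the latency effect concrete. The sketch below assumes the transactions run one after another and each costs exactly one round trip; the counts of 100 and 200 round trips are illustrative values chosen to reproduce the five to 10 second range, not figures from a real application.

```python
def user_action_seconds(round_trips: int, rtt_ms: float, server_ms_per_trip: float = 0.0) -> float:
    """Time for one user action made of sequential request/response exchanges."""
    return round_trips * (rtt_ms + server_ms_per_trip) / 1000


for label, rtt_ms in (("LAN, 2 ms RTT", 2.0), ("WAN, 50 ms RTT", 50.0)):
    for trips in (100, 200):
        seconds = user_action_seconds(trips, rtt_ms)
        print(f"{label}, {trips} round trips: {seconds:.1f} s")
```

The same action that completes in well under half a second on the LAN takes 5 to 10 seconds over the 50 ms path, regardless of how much bandwidth is available.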

It is easy to overlook latency, particularly when the application comes from an external party and you don’t know its internal operation. I recommend validating an application in a high-latency WAN environment or with a WAN simulator before you migrate processing from a LAN to a WAN environment.

Shared capacity and congestion. Most WAN carriers provide an IP link with the assumption you won’t be using all the bandwidth all the time. This allows them to multiplex multiple customers over a common infrastructure, providing more competitive pricing than if it were a dedicated circuit. However, this means there may be times when the traffic from multiple customers causes congestion at points along the path between two data centers. Packets are delayed or dropped when the congestion exceeds the amount of buffering available in the network equipment.

Make sure you have a clear understanding of the committed information rate in your WAN contract. You may need to implement quality-of-service configurations that shape or police traffic so that excess traffic is delayed or dropped before it enters the WAN.
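Policing against a committed information rate is commonly described in terms of a token bucket. The following is a conceptual sketch only, not a vendor configuration: the class name, the 8 kbps rate and the 1,500-byte burst are hypothetical values chosen to keep the numbers easy to follow.

```python
class TokenBucketPolicer:
    """Conceptual single-rate policer: drop packets that exceed the committed rate."""

    def __init__(self, cir_bps: float, burst_bytes: int):
        self.rate_bytes_per_sec = cir_bps / 8   # tokens (bytes) added per second
        self.burst_bytes = burst_bytes          # bucket depth
        self.tokens = float(burst_bytes)        # start with a full bucket
        self.last_time = 0.0

    def allow(self, packet_bytes: int, now: float) -> bool:
        """Return True if the packet conforms, False if it should be dropped."""
        elapsed = now - self.last_time
        self.last_time = now
        # Refill tokens for the elapsed time, never beyond the bucket depth.
        self.tokens = min(self.burst_bytes, self.tokens + elapsed * self.rate_bytes_per_sec)
        if packet_bytes <= self.tokens:
            self.tokens -= packet_bytes
            return True
        return False


policer = TokenBucketPolicer(cir_bps=8_000, burst_bytes=1500)  # hypothetical 1,000 bytes/s rate
for t in (0.0, 0.5, 1.5):
    print(t, policer.allow(1500, now=t))
```

A shaper differs in that it queues the nonconforming packet until enough tokens accumulate instead of dropping it.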

This problem becomes greater when the path is over the internet. There are clear internet congestion effects coinciding with peak usage that occurs locally at lunchtime, after school and in the evening. Data paths spanning multiple time zones may experience longer congestion times as the peak usage migrates from one time zone to the next.

SD-WAN may be able to help with overall throughput for some applications. These products can measure the latency, bandwidth and packet loss of multiple links and allow you to direct different types of traffic over links with specific characteristics. For example, important application traffic can take a reliable MPLS path, while bulk data uses a high-speed broadband internet path.
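The idea of steering traffic by measured link characteristics can be illustrated with a small sketch. This does not represent any SD-WAN product's API: the `LinkStats` type, the traffic class names and the measurements are all hypothetical, and real products apply far richer policies.

```python
from dataclasses import dataclass


@dataclass
class LinkStats:
    name: str
    latency_ms: float      # measured round-trip latency
    loss_pct: float        # measured packet loss
    bandwidth_mbps: float  # measured available bandwidth


def pick_link(links: list[LinkStats], traffic_class: str) -> LinkStats:
    """Choose a link for a traffic class using a deliberately simple policy."""
    if traffic_class == "critical":
        # Important application traffic: prefer lowest loss, then lowest latency.
        return min(links, key=lambda link: (link.loss_pct, link.latency_ms))
    # Bulk data: prefer the highest-bandwidth path.
    return max(links, key=lambda link: link.bandwidth_mbps)


links = [
    LinkStats("mpls", latency_ms=40.0, loss_pct=0.0, bandwidth_mbps=100.0),
    LinkStats("broadband", latency_ms=55.0, loss_pct=0.5, bandwidth_mbps=1000.0),
]
print(pick_link(links, "critical").name)
print(pick_link(links, "bulk").name)
```

With these made-up measurements, critical traffic is sent over the MPLS link and bulk traffic over the broadband link, mirroring the example in the paragraph above.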

TCP congestion avoidance. A significant factor in TCP performance is the vintage of the TCP/IP stack, particularly the congestion control algorithm. Congestion can occur in network devices where link speeds change and at aggregation points. For example, we once had a customer with a speed mismatch between its 10 Gbps LAN connection and a 1 Gbps WAN link. A more modern TCP/IP stack would have handled the resulting congestion better and achieved higher throughput.

Understanding Data Center Interconnect Technologies

It is good to understand how key applications will use the network and how additional latency will affect their performance. In some cases, an alternative deployment design may be needed. This means network engineers need to be familiar with all of the concepts noted above and, more importantly, be able to explain these issues to others who may not be familiar with the application performance issues.

One parting thought: Technological developments such as software-defined networking, intent-based networking and machine learning may ease some data center interconnect issues. But they do not change the laws of physics that govern the performance limitations of high-speed, high-latency data center interconnects.

To read the original blog post, view TechTarget’s post here.