Pluribus did a nice job presenting at #NFD16. Their product was new to me. I’m a structured knowledge kind of guy, so I’ve been trying to figure out where to fit them into my mental schema.

VXLAN and Fabric Products

The high level is easy: fabric management products. The two leading ones are Cisco’s ACI and VMware NSX. Both are controller-based. Both can do policy. Apstra: controller, no policy.

Both ACI and NSX do VXLAN, but the approaches to VXLAN do not interoperate. I’d describe both their approaches as controller-based VXLAN tunneling over an L3 underlay — they don’t interoperate because there’s an issue of who’s in control and tracking VTEP/MAC address information. To me, the key is ARP or equivalent, and how the fabric knows where a device is located — as in which port on which VTEP (VXLAN tunnel endpoint).
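To make the “who tracks VTEP/MAC information” point concrete, here is a minimal sketch of the kind of table any of these fabrics has to maintain somewhere. It is not how ACI or NSX store things; the class names, the VNI number, and the addresses are all made up for illustration. The point is only that whoever owns this mapping is effectively in control, which is why two controllers don’t easily share a fabric.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class Location:
    vtep_ip: str  # IP of the VXLAN tunnel endpoint in the L3 underlay
    port: str     # port on that VTEP where the device is attached


class EndpointTable:
    """Toy (VNI, MAC) -> location map. Hypothetical, for illustration only."""

    def __init__(self) -> None:
        self._entries: Dict[Tuple[int, str], Location] = {}

    def learn(self, vni: int, mac: str, vtep_ip: str, port: str) -> None:
        # Normalize the MAC so lookups are case-insensitive.
        self._entries[(vni, mac.lower())] = Location(vtep_ip, port)

    def lookup(self, vni: int, mac: str) -> Optional[Location]:
        # None means "location unknown": the fabric must flood, query, or drop.
        return self._entries.get((vni, mac.lower()))


table = EndpointTable()
table.learn(10100, "00:50:56:AA:BB:01", "10.0.0.11", "eth1/5")
loc = table.lookup(10100, "00:50:56:aa:bb:01")
miss = table.lookup(10100, "00:50:56:aa:bb:99")
```

What happens on a miss (flood, ask a controller, ask BGP, ask a peer) is where the products actually differ.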

Contrast Cisco VXLAN/EVPN, which has no controller but does MAC/IP learning via MP-BGP. Apstra: not clear. Do they do BUM forwarding or anycast gateway? I haven’t run that information down yet.

So now we have a framework to try to fit Pluribus into. Hopefully no folding, spindling, or awkwardness involved. Let’s get to the subject of this blog (at long last?).

Focus on Pluribus

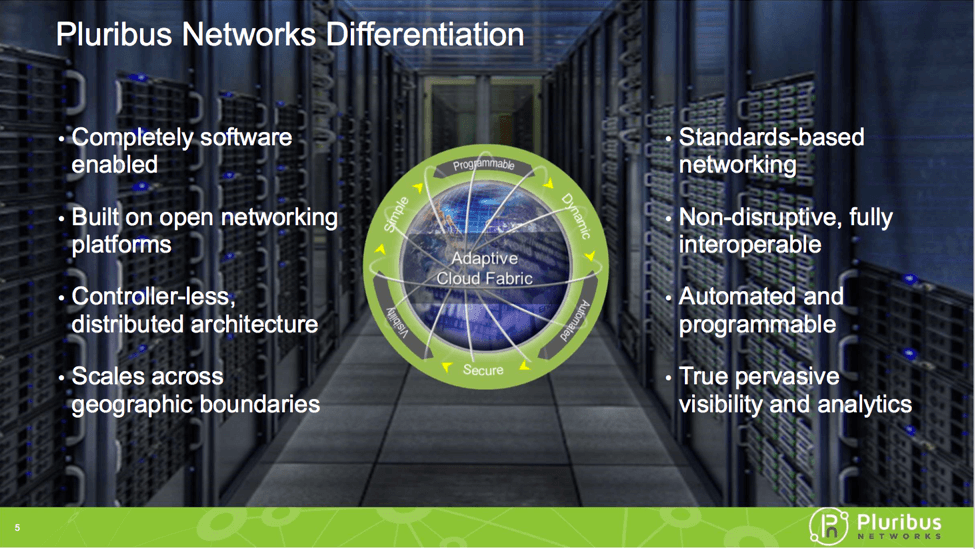

Pluribus Netvisor OS is a bit different from those just mentioned. It apparently used to be Solaris-based, and has pivoted onto Linux. Netvisor is controller-less, running in distributed form on white box switches, OpenCompute/ONIE-compliant. Switches supported include Dell EMC, Edgecore, Pluribus Freedom switches, and now D-Link.

To emphasize the distributed nature of Netvisor: you can issue CLI commands on any switch and they apply across the fabric of switches. Unlike some fabrics, you just need routing reachability for control, not, for example, dedicated management ports for the control network. So you can use Netvisor to control a bunch of leaf switches and, separately or not, control the spine switches.

Pluribus thus gets points from me, for recognizing that we need to manage the fabric as one large switch, and get away from managing individual devices.

Netvisor tracks attached devices, via the vPort mechanism (virtual port). I suspect each switch learns what’s attached, and they either communicate that or have a way to query each other. My notes say “conversational forwarding, no broadcasts,” so I suspect the latter (query approach) applies. Although with ACI, you’d query the MAC/IP database – with Pluribus, is it a query to all the distributed database elements?
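Here is a rough sketch of what I mean by the query approach. To be clear, this is my guess at the general idea, not Pluribus’ actual vPort implementation; the `Switch` class and its methods are hypothetical. Each switch knows its own attached devices, asks its peers on a miss, and caches the answer so later traffic to the same device needs no further queries and no broadcast.

```python
from typing import Dict, List, Optional, Tuple


class Switch:
    """Hypothetical switch holding only its own attachment table plus a cache."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.local: Dict[str, str] = {}              # MAC -> local port
        self.cache: Dict[str, Tuple[str, str]] = {}  # MAC -> (switch, port)
        self.peers: List["Switch"] = []
        self.queries_sent = 0

    def attach(self, mac: str, port: str) -> None:
        self.local[mac] = port

    def answer(self, mac: str) -> Optional[str]:
        # A peer is asking whether this MAC is attached here.
        return self.local.get(mac)

    def locate(self, mac: str) -> Optional[Tuple[str, str]]:
        if mac in self.local:
            return (self.name, self.local[mac])
        if mac in self.cache:
            return self.cache[mac]
        # Miss: query peers one at a time instead of flooding the fabric.
        for peer in self.peers:
            self.queries_sent += 1
            port = peer.answer(mac)
            if port is not None:
                self.cache[mac] = (peer.name, port)
                return self.cache[mac]
        return None


leaf1, leaf2, leaf3 = Switch("leaf1"), Switch("leaf2"), Switch("leaf3")
leaf1.peers = [leaf2, leaf3]
leaf3.attach("00:50:56:aa:bb:03", "p7")

first = leaf1.locate("00:50:56:aa:bb:03")   # queries peers, then caches
second = leaf1.locate("00:50:56:aa:bb:03")  # served from the cache
```

The obvious open question is the one I raised above: with a distributed database, the miss path touches many switches rather than one central store.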

The fabric apparently auto extends to fabric-attached VMware ESX server clusters — vCenter integration with “seamless orchestration.”

Netvisor creates virtual network containers on the switches, each with its own virtualized router, providing segmentation. This leads to the “Adaptive Cloud Fabric.”

Netvisor is positioned for the datacenter, or racks/pods, or hyper-converged infrastructure, e.g. Nutanix, vSAN (vSAN aware), VxRail. It can now operate as an NSX hardware VTEP L2 gateway.

Pluribus talks about pervasive visibility into all traffic, all ports, without dedicated probes, with the ability to monitor TCP connections. That can feed into Pluribus’ Insight Analytics and VirtualWire services. All that sounded flow-centric to me, which is OK, but to me NetFlow or flow metadata is somewhat secondary to per-port data as a problem indicator. I didn’t hear (time constraints, etc.) whether Pluribus can stream or otherwise collect or provide per-port bytes, packets, errors, and discards data as well. I suspect the vPort scheme collects some statistics, but how do I capture them over time?
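For what it’s worth, this is the sort of per-port processing I have in mind: take two samples of the raw counters and turn them into rates. It is a generic sketch, not tied to any Pluribus API (I don’t know what they expose); the `PortSample` fields and the 64-bit counter width are my assumptions.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class PortSample:
    timestamp: float  # seconds
    rx_bytes: int
    rx_errors: int
    rx_discards: int


def rates(prev: PortSample, curr: PortSample, counter_bits: int = 64) -> Dict[str, float]:
    """Convert two counter samples into per-second rates, tolerating one wrap."""
    dt = curr.timestamp - prev.timestamp
    if dt <= 0:
        raise ValueError("samples must be in increasing time order")
    modulus = 1 << counter_bits

    def delta(old: int, new: int) -> int:
        # Modular subtraction handles a single counter rollover.
        return (new - old) % modulus

    return {
        "rx_bps": delta(prev.rx_bytes, curr.rx_bytes) * 8 / dt,
        "rx_errors_per_s": delta(prev.rx_errors, curr.rx_errors) / dt,
        "rx_discards_per_s": delta(prev.rx_discards, curr.rx_discards) / dt,
    }


prev = PortSample(timestamp=0.0, rx_bytes=1_000_000, rx_errors=10, rx_discards=0)
curr = PortSample(timestamp=30.0, rx_bytes=4_750_000, rx_errors=13, rx_discards=6)
result = rates(prev, curr)
```

Non-zero error or discard rates on a port are what I usually want to see first, before digging into flows.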

Pluribus documentation requires a login. (I went looking for which third-party switches are supported and couldn’t readily find that info. I did find the marketing page listing the vendors. Short list.)

Bzzt, that costs points. I think vendors should (must!) provide open access to their documentation. Anything else defeats Google search, which (a) slows me down, which loses you points, and (b) is usually a heck of a lot better than whatever each vendor uses for indexing. Sigh.

Quick Pluribus Facts

- When there’s a failure, Netvisor doesn’t have to reconverge. Policies are known, shared, learned, remembered.

- But: Netvisor does 3-phase commit. OK, what about scaling that, and 3-phase commit’s inability to recover from any control network segmentation (partitioning)? So no changes while the controller is down, devices “coast” (as-is); that’s common, but you have a distributed connectivity problem to resolve. Not so good, especially if a WAN is involved? (So don’t do fabric across the WAN, which is what my gut says is wise anyway.)

- The management platform is Pluribus UNUM. Google search doesn’t work too well for that for obvious reasons … UNUM supports automated L2/L3 leaf/spine buildout. It does device and fabric management, plus topology visualization. It does the analytics and performance management as well.

- Pluribus does topology visualization, including other vendors’ switches. I don’t think I’ve seen this in the demos/slideware. You might watch the streaming videos to see if I’m being forgetful here.

- Netvisor has been tested to 40 switches in the fabric. (Number of ports?)

- It has been tested to 80-100 msec latency. However, doing any single control plane across the WAN is something I find deeply disturbing. Among other things: wide-scale failure domain?

- Is Netvisor doing OpenFlow under the hood? Do I care? Should I care? (I’m somewhat allergic to OpenFlow, I’ll spare you why.)
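Back on the 3-phase commit bullet above, here is a deliberately simplified, single-process sketch of why reachability matters. It is not Netvisor’s implementation; the `Switch` class and `three_phase_commit` function are hypothetical. It also leaves out the participant-side timeouts that real three-phase commit relies on, which is exactly the part that goes wrong when the control network is partitioned.

```python
from typing import Dict, List


class Switch:
    """Hypothetical fabric member taking part in a config commit."""

    def __init__(self, name: str, reachable: bool = True) -> None:
        self.name = name
        self.reachable = reachable
        self.state = "init"
        self.pending: Dict[str, int] = {}
        self.config: Dict[str, int] = {}

    def _check(self) -> None:
        if not self.reachable:
            raise ConnectionError(f"{self.name} unreachable")

    def can_commit(self, change: Dict[str, int]) -> bool:
        self._check()
        self.state = "ready"
        return True

    def pre_commit(self, change: Dict[str, int]) -> None:
        self._check()
        self.pending = dict(change)
        self.state = "precommitted"

    def do_commit(self) -> None:
        self._check()
        self.config.update(self.pending)
        self.pending = {}
        self.state = "committed"

    def abort(self) -> None:
        # An unreachable switch cannot be told to abort; it keeps its old state.
        if self.reachable:
            self.pending = {}
            self.state = "aborted"


def three_phase_commit(switches: List[Switch], change: Dict[str, int]) -> bool:
    try:
        for sw in switches:      # phase 1: can-commit
            sw.can_commit(change)
        for sw in switches:      # phase 2: pre-commit
            sw.pre_commit(change)
    except ConnectionError:
        for sw in switches:
            sw.abort()
        return False
    for sw in switches:          # phase 3: do-commit
        sw.do_commit()
    return True


fabric = [Switch("leaf1"), Switch("leaf2"), Switch("leaf3", reachable=False)]
blocked = three_phase_commit(fabric, {"vlan": 100})  # one member cut off
fabric[2].reachable = True
applied = three_phase_commit(fabric, {"vlan": 100})  # connectivity restored
```

In this toy version one unreachable switch blocks the change for everyone, which is the “coasting” behavior. The harder cases, where a partition appears between phases, are the ones that worry me at scale or across a WAN.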

Bottom Line

- Netvisor is distributed, controller-less. Although if you want automation and GUI, that’s where UNUM comes in?

- Limited support for other vendors’ switches.

- Netvisor does routing (VRF-like?) L3 segmentation, VLANs, and VXLAN. What type of VXLAN behavior it uses is not totally clear, although the guess is the distributed controller knows all, shares the info, and fills in gaps with “conversational learning” (i.e., JIT lookups).

- Market niche: SMB to mid-size companies? (But do they need datacenter fabrics?) Value proposition as NSX underlay controller? Or?

As far as market niche goes, it seems like Pluribus fits as either an underlay controller for VMware, or for use with VMware in a fabric with significant remaining physical server presence. As with any multi-vendor product, support for any vendor’s switch depends on both the hardware and software versions being supported. Continuing support might be another consideration.

Reference Blogs

There’s some complementary information in a couple of the earlier #NFD16 blogs:

Comments

Comments are welcome, whether in agreement or constructive disagreement with the above. I enjoy hearing from readers and carrying on deeper discussion via comments. Thanks in advance!